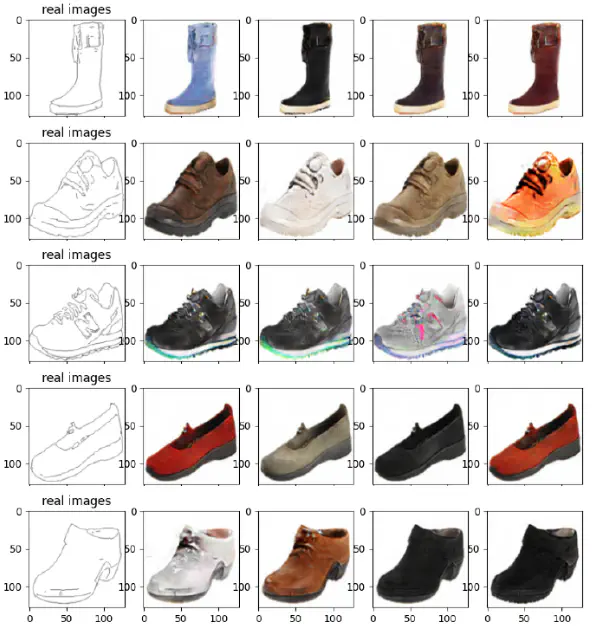

Image-to-image translation transforms images from one domain so that they take on the characteristics of another domain while preserving their content. Generative adversarial networks (GANs) have made substantial progress in recent years toward photo-realistic image-to-image translation, with applications in synthesis, restoration, and style transfer. In this project, we explore multimodal conditional synthesis based on BicycleGAN. While most GAN-based approaches suffer from mode collapse in conditional synthesis, BicycleGAN proposes a hybrid model that encourages an invertible mapping between the output and the latent code, which should improve generation diversity while maintaining realism. We aim to further improve BicycleGAN’s performance through architectural and loss-function modifications.

We find that combining multiple objectives that encourage a bijective mapping between the latent and output spaces yields results that are more realistic and more diverse. We also observe some trade-off between realism and diversity as generator and discriminator complexity increases. However, because GAN training is unstable, consistent results are relatively hard to achieve.
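To make the idea of an invertible mapping between latent code and output concrete, the following is a minimal sketch of a latent regression term of the kind BicycleGAN uses: an L1 distance between a sampled code `z` and the code recovered by an encoder from the generated output. It is not our training code. The generators `g_diverse` and `g_collapsed`, the `encoder`, the integer-valued input `a`, and the uniform sampling of `z` are all hypothetical toy stand-ins chosen so that the example runs with the standard library alone.

```python
import random


def latent_l1(z, z_hat):
    """Mean absolute difference between a latent code and its reconstruction."""
    return sum(abs(u - v) for u, v in zip(z, z_hat)) / len(z)


def g_diverse(a, z):
    # Hypothetical toy generator: each output value is shifted by the latent code,
    # so different codes give different outputs for the same input.
    return [a_i + 0.1 * z_i for a_i, z_i in zip(a, z)]


def g_collapsed(a, z):
    # Hypothetical mode-collapsed generator: the latent code is ignored.
    return [float(a_i) for a_i in a]


def encoder(b):
    # Hypothetical toy encoder E(B): inputs are integers in this toy setting,
    # so the fractional offset of each output value reveals the latent code.
    return [(b_i - round(b_i)) / 0.1 for b_i in b]


def latent_regression_loss(generator, a, n_samples=1000, seed=0):
    """Average L1 distance between z and E(G(a, z)) over sampled codes."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        # Toy assumption: z is uniform in [-1, 1] so the toy encoder can invert it.
        z = [rng.uniform(-1.0, 1.0) for _ in a]
        z_hat = encoder(generator(a, z))
        total += latent_l1(z, z_hat)
    return total / n_samples


a = [3, 7, 1, 4]  # toy "input image" with integer values
loss_diverse = latent_regression_loss(g_diverse, a)
loss_collapsed = latent_regression_loss(g_collapsed, a)
print(f"diverse generator:   {loss_diverse:.3e}")
print(f"collapsed generator: {loss_collapsed:.3e}")
```

In this toy setting the generator that uses `z` lets the encoder recover the code almost exactly, while the collapsed generator leaves a large reconstruction error. This is the sense in which a latent regression penalty discourages a generator from ignoring its latent input.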